Developers are building at AI speed, using tools like Copilot, Cursor, and Claude as if they’re full-time teammates. But here’s the uncomfortable question no one’s asking:

If your developers are using AI every day… is your SDLC even aware of it?

Because if it’s not, then congrats, you’re optimizing parts of the engine while ignoring the vehicle.

I still remember the first time I heard “SDLC.” I thought it stood for Standard Developer Life Crisis. Turns out, it meant Software Development Life Cycle, basically a fancy checklist to keep dev chaos from burning the house down.

Plan → Build → Test → Deploy → Breathe.

It worked. For a while.

Fast-forward to now: devs type a prompt like “Build me a CRUD app” into Cursor, and it’s done before their coffee cools.

But our SDLC? It still acts like every line was written by hand. We're still estimating like it's 2015, reviewing code like it's poetry, and getting surprised when features ship in hours instead of days.

You’ve got AI-powered teams zooming ahead, while your process is stuck in slow motion.

It’s not that the SDLC is broken; it just hasn’t caught up, like someone trying to run a modern VR headset on an old computer.

The Disconnect: Your SDLC Is Still Living in a Pre-AI World

AI hasn’t just tweaked how we write code; it has overhauled the “how” of software development itself. But while the code has evolved, the systems managing that code are still stuck in the pre-AI era, running playbooks written for a time when “automation” meant a bash script and a Jenkins job.

Let’s zoom in on what’s really happening today.

Developers aren’t writing code line by line anymore; they’re prompting it. One well-worded prompt like “Build a CRUD service for product inventory with validations and unit tests” produces hundreds of lines in seconds.

Debugging, once a back-and-forth of breakpoints, print statements, and coffee-fueled guesswork, is now done with an AI pair programmer, with Cursor or Copilot catching errors as they’re typed.

Even test cases, the first thing we all quietly agreed to drop when a sprint went sideways (don’t worry, we did it too 😅), are now magically back on the menu. Just type “// write a test for this” and boom: you’ve got mocks, edge cases, and more structure than most legacy apps. AI brought testing back from the dead, no guilt trips from QA required.

And yet… your SDLC is still partying like it’s 2015: manually reviewing AI-generated code, estimating tasks as if they were handcrafted, and acting shocked when something ships in hours instead of days. It’s like upgrading your devs to self-driving mode and then asking them to walk the route just to be sure.

Picture this:

In traditional development, a developer spends a full day building a REST API, writing routes, validations, pagination, error handling, and logging. That’s eight hours of focused work. But now? An AI handles the scaffolding, the developer tweaks it for context, and it’s done in under an hour. Still, the sprint planning board reflects an “8-point” estimate because the assumption is that someone wrote all that.

This mismatch between how code is created and how it’s managed introduces friction at every level of the lifecycle. Reviews take longer because they’re applied with the wrong lens. QA misses bugs because it expects human logic. Estimations break velocity charts because the output looks the same, but the effort behind it is radically different.

We’re in an AI-first dev era. But your SDLC is still carrying a flip phone.

What an AI-Aware SDLC Actually Looks Like

If developers are driving Teslas, your SDLC needs to stop acting like a roadside mechanic inspecting carburetors.

An AI-aware SDLC isn’t just about allowing devs to use Copilot or Cursor. That’s surface-level. The real transformation begins when the entire software lifecycle (planning, coding, reviewing, testing, and shipping) adapts to the new reality: AI is now part of the team.

At Hivel, we’ve spent months studying exactly how AI is changing the way code is written, and more importantly, how the current SDLC breaks when it can’t see or support that change. What we’ve learned is simple: to fully unlock AI’s potential, you need visibility, context, and feedback loops that treat AI like part of the team, not just a silent helper.

So what does that actually look like?

1. Code Reviews That Know What’s Human, and What’s Not

You can’t treat AI-generated code like it’s handcrafted artisanal software. Let’s drop the illusion that every line came from deep thought and late-night caffeine.

For years, our first line of defense in code quality, especially in the "Test" phase of the SDLC, has often been linters – those rule-based gatekeepers checking for syntax and style.

Yet, their rigid, rule-based approach often falls short. By design, they are excellent at policing surface-level issues but remain fundamentally blind to the code's deeper intent or architectural context. This inherent limitation highlights why our SDLC processes need a more intelligent, context-aware approach in the AI era.

Modern code reviews aren’t about nitpicking indentation or naming conventions. They’re about validating what actually matters: intent, integration, security, and whether the AI took a creative detour nobody asked for.

In an AI-aware review flow, the focus shifts completely.

It’s no longer “who wrote this?”, it’s:

- Is the logic sound and contextually relevant?

- Does this play well with existing modules and systems?

- Did the AI miss an edge case or blindly follow a common pattern?

Reviewers are no longer gatekeepers of syntax; they’re guardians of context and safety. By focusing on intent over authorship, reviews become faster, sharper, and more in tune with how modern code is actually written.

And once we embraced this mindset, something wild happened; even our designers started shipping frontend code to production.

Because let’s face it, it’s no longer about who wrote the code.

It’s about whether it deserves to ship.

2. Estimation That Reflects AI-Accelerated Velocity

Story points were designed to reflect effort: roughly how much work something would take a human to build from scratch. But when AI handles 70% of the boilerplate in under five minutes, that model starts to fall apart.

In an AI-aware SDLC, estimation evolves.

It’s no longer just about time, it’s about where real human thinking is required.

- Estimation accounts for human input, not just total output.

- Velocity spikes are met with curiosity, not suspicion.

- Sprint planning adapts to the new, AI-augmented cadence of delivery: fast builds, fast iterations, and faster feedback loops.

That 13-point story wrapped in 2 hours?

It’s not an outlier. It might just be your new baseline.

Because in an AI-augmented workflow, speed isn’t suspicious. It’s a signal that your process is finally catching up with your tools.
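To make the arithmetic concrete, here is a minimal sketch, assuming a simple model in which a task splits into a part AI scaffolds and a part a human still writes by hand. The function name and the `ai_share` and `ai_speedup` parameters are illustrative assumptions, not an established estimation method; the formula has the same shape as Amdahl's law.

```python
def human_effort_hours(baseline_hours: float, ai_share: float, ai_speedup: float) -> float:
    """Estimate the remaining effort for a task once AI assistance is factored in.

    baseline_hours: hours the task would take if written entirely by hand.
    ai_share: fraction of the work (0..1) that AI scaffolds.
    ai_speedup: how many times faster the AI-scaffolded part gets done,
                including human review of it (hypothetical parameter).
    """
    if not 0.0 <= ai_share <= 1.0:
        raise ValueError("ai_share must be between 0 and 1")
    if ai_speedup <= 0:
        raise ValueError("ai_speedup must be positive")
    manual_part = baseline_hours * (1.0 - ai_share)       # still done by hand
    assisted_part = baseline_hours * ai_share / ai_speedup  # accelerated by AI
    return manual_part + assisted_part


# Example: an 8-hour task where AI handles 70% of the work ten times faster.
estimate = human_effort_hours(8.0, ai_share=0.7, ai_speedup=10.0)
print(f"{estimate:.2f} hours")
```

Note that the part still written by hand dominates the result, which is why estimation in this model centers on where human thinking is required rather than on total output.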

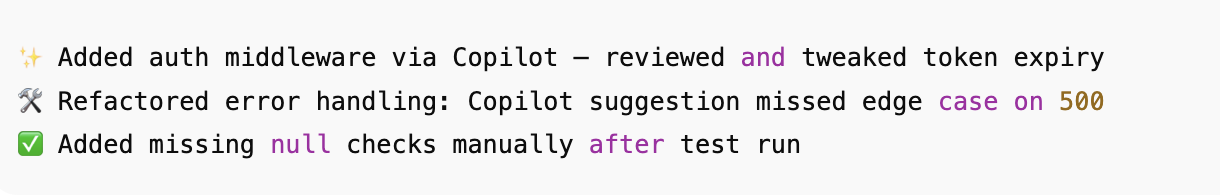

3. Git Logs That Actually Tell a Story

Let’s be real: most commit histories today read like a developer bargaining with the repo gods, one vague “fixed stuff” message after another.

It’s funny until you’re the one trying to review that PR.

In today’s AI-powered world, this kind of commit trail does more harm than good. When code is partially generated, lightly edited, or heavily refactored, you need more than just a message saying “fixed stuff.” You need context.

An AI-aware Git workflow elevates version control from a change log to a narrative. Imagine a commit history that records how each change was produced.
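One way to picture such a commit is with Git-style trailers, the "Key: value" lines at the end of a commit message. The sketch below is a minimal illustration under assumptions: the trailer names (`AI-Assisted`, `AI-Scope`, `Human-Changes`) and the commit text are hypothetical conventions a team would have to agree on, not a Git or tooling standard.

```python
# Hypothetical commit message using Git-style trailers ("Key: value" lines
# in the final paragraph). The trailer names are made up for this sketch.
COMMIT_MESSAGE = """\
Add pagination to product inventory endpoint

Scaffolded the route and validation with an AI assistant, then rewrote
the cursor logic by hand to handle deleted rows.

AI-Assisted: yes
AI-Scope: route scaffolding, input validation
Human-Changes: cursor logic, error handling
"""


def parse_trailers(message: str) -> dict[str, str]:
    """Return the "Key: value" pairs from the last paragraph of a commit message."""
    paragraphs = [p for p in message.strip().split("\n\n") if p.strip()]
    if len(paragraphs) < 2:
        return {}  # subject line only, so there is no trailer block
    trailers = {}
    for line in paragraphs[-1].splitlines():
        key, sep, value = line.partition(":")
        if not sep or " " in key.strip():
            return {}  # last paragraph is prose, not a trailer block
        trailers[key.strip()] = value.strip()
    return trailers


trailers = parse_trailers(COMMIT_MESSAGE)
print(trailers["AI-Scope"])
```

Git can read trailer blocks like this natively with `git interpret-trailers --parse`, so a convention along these lines would not need custom parsing in practice; the function above only shows the idea.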

Now reviewers know what was AI-generated, what was modified, and where the dev actually stepped in to fix or refine. It’s like going from a blurry security cam to a 4K replay with commentary.

The result?

Cleaner reviews. Faster merges. Fewer “Hey, what happened here?” messages in Slack.

This is Git with receipts: not just who changed what, but how and why.

Because in an AI-assisted world, authorship is a collaboration, and your logs should reflect that.

4. Prompt Hygiene Over Code Hygiene

In the age of AI, how you ask matters more than how you code.

A weak prompt? It gives you shallow, rigid code.

A strong prompt? It gives you code that’s reusable, scalable, and reads like it was written by your staff engineer on a good day.

Prompts are no longer disposable throwaways. They’re design decisions. And yet, most teams have zero visibility into what was asked, how it evolved, or why the AI made the choices it did.

Here’s where things break: two developers are asked to implement the same feature. One gets elegant, well-structured code. The other ends up with spaghetti and a side of global variables. Same task. Same tool. Wildly different outcomes.

The problem isn’t in the code; it’s in the conversation they had with their AI pair programmer. That’s why prompt history needs to be treated like commit history. Teams should review, refine, and reuse good prompts the same way they treat clean code patterns or shared utility functions.

Prediction: One day, prompt reviews will be as normal as code reviews. And the best prompt writers? They’ll be your next senior engineers.

Meet Hivel: The Missing Link Between AI Coding and Real Engineering Intelligence

You’ve got devs building at AI speed.

You’ve got story points getting crushed in hours.

You’ve got Copilot and Cursor generating, refactoring, debugging, and shipping.

But here’s the punchline: Nobody’s tracking how it’s happening.

That’s where Hivel steps in.

Hivel isn’t just another metrics dashboard; it’s an AI-aware lens for your entire SDLC. It plugs into GitHub Copilot, Cursor, and your version control system, and gives you a unified view of how your code is actually being built: by humans, by AI, or by both.

Here’s how Hivel brings your SDLC into the AI era, clearly, transparently, and with real impact:

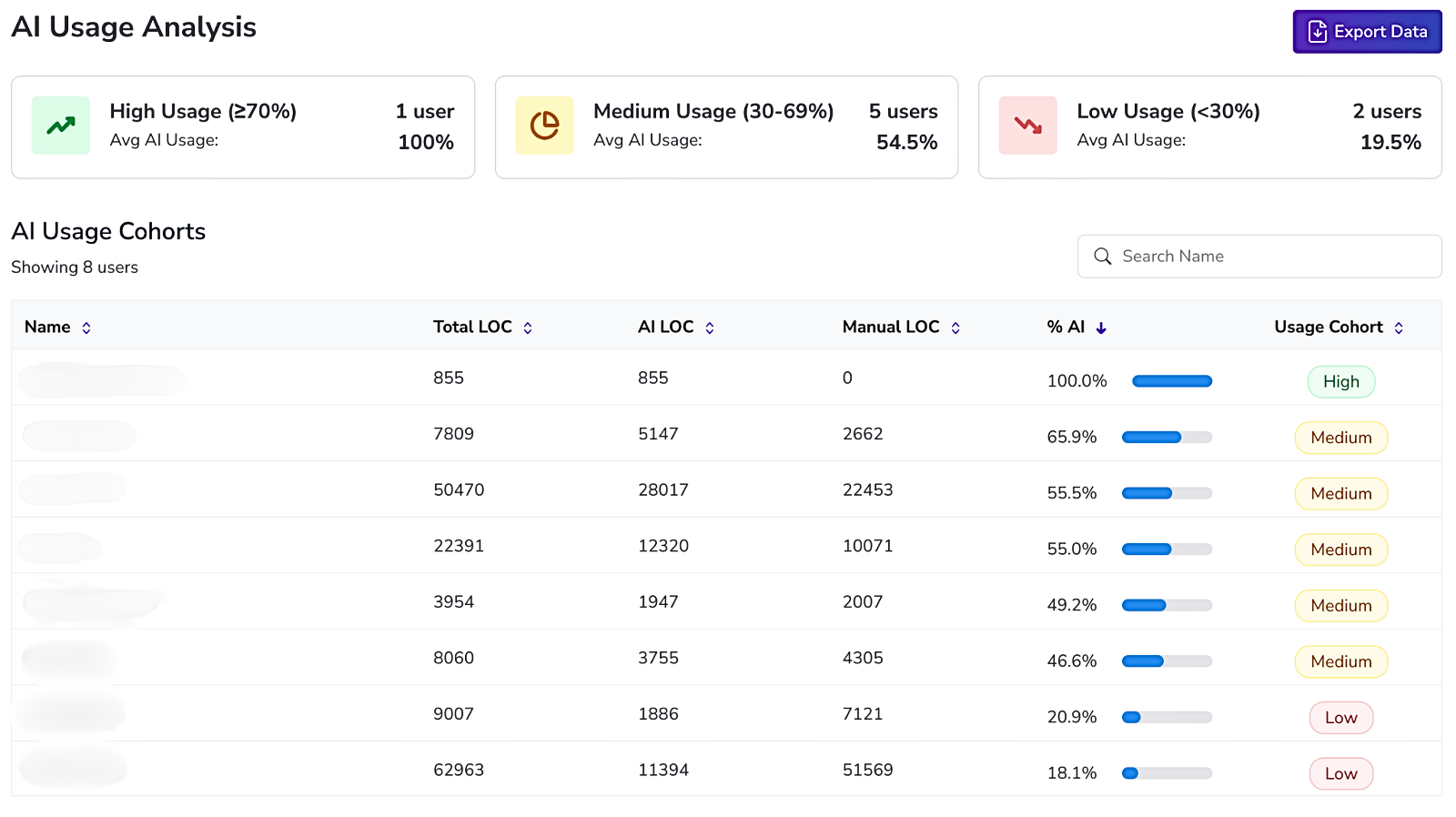

1. Track AI Usage by Developer, Team, or Org

Not all AI usage is created equal.

Some devs are flying on full autopilot. Others are still tapping the brakes. Hivel segments your teams into AI usage cohorts (High, Medium, Low) based on real code-level activity.

You’ll see:

- Total lines of code written

- % of that code written by AI

- Who’s leveraging AI most (and who might need support or onboarding)

Slice it by org, team, or individual, and suddenly, you’ve got a map of AI adoption across your entire engineering organization.
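As a rough illustration of the cohort idea (not Hivel's actual method, which the article does not describe), here is a minimal sketch that buckets developers by the share of their code attributed to AI. The `DevStats` type, the 50% and 20% thresholds, and the sample numbers are all assumptions made up for this example.

```python
from dataclasses import dataclass


@dataclass
class DevStats:
    name: str
    total_lines: int  # all lines of code attributed to the developer
    ai_lines: int     # subset of those lines attributed to AI tools


def ai_share(stats: DevStats) -> float:
    """Fraction of a developer's lines attributed to AI (0.0 if no lines)."""
    if stats.total_lines == 0:
        return 0.0
    return stats.ai_lines / stats.total_lines


def cohort(share: float, high: float = 0.5, low: float = 0.2) -> str:
    """Map an AI share to a cohort label; the thresholds are hypothetical."""
    if share >= high:
        return "High"
    if share >= low:
        return "Medium"
    return "Low"


# Made-up sample data, for illustration only.
team = [
    DevStats("dev_a", total_lines=1200, ai_lines=780),
    DevStats("dev_b", total_lines=900, ai_lines=270),
    DevStats("dev_c", total_lines=400, ai_lines=20),
]

for dev in team:
    share = ai_share(dev)
    print(f"{dev.name}: {share:.0%} AI-written -> {cohort(share)}")
```

The same labels can then be aggregated per team or per org by summing line counts before computing the share.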

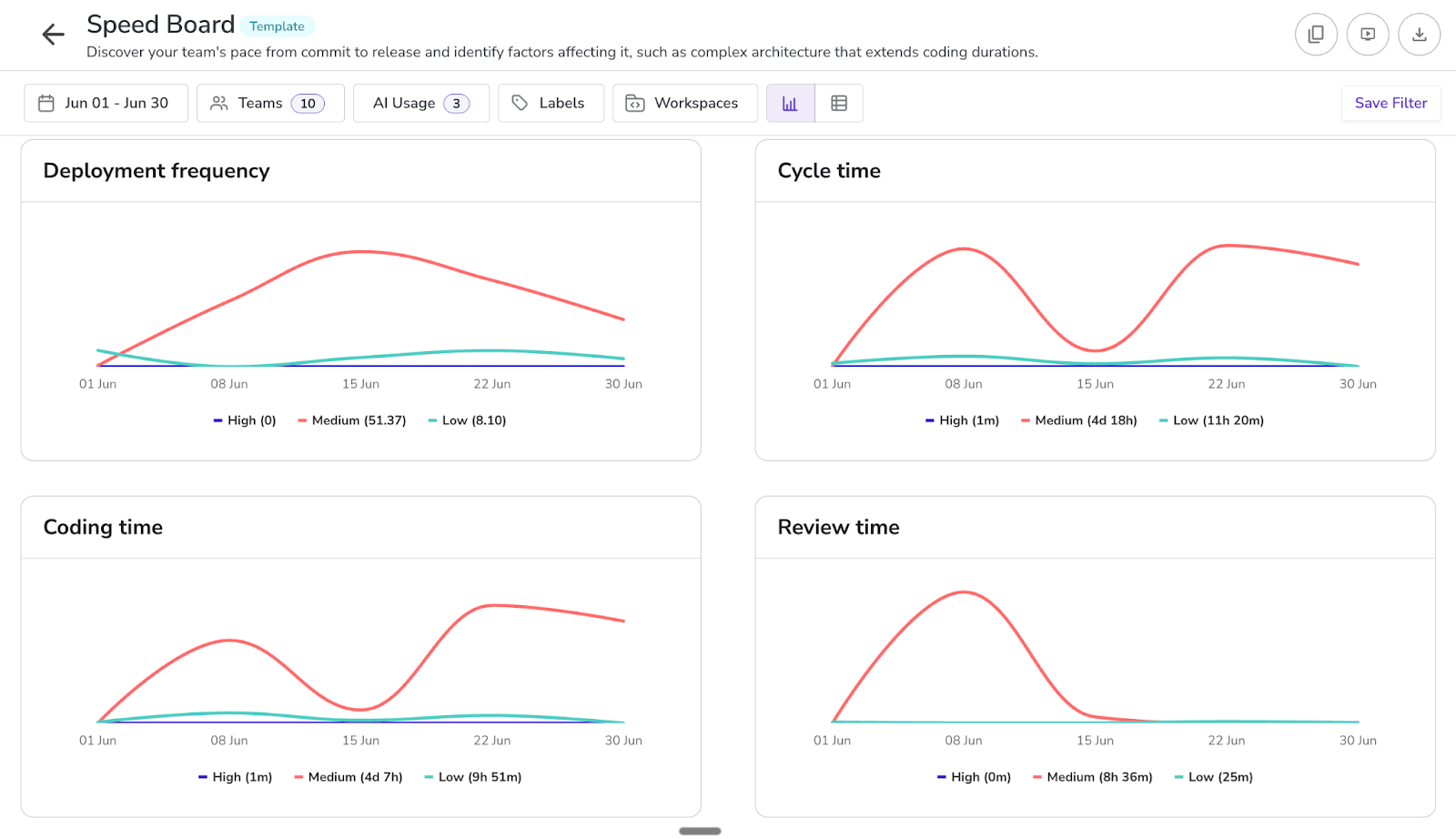

2. Understand the Impact of AI on Real Engineering Metrics

AI isn’t just generating code; it’s reshaping how fast teams ship.

Hivel lets you cohort users by AI usage and directly compare engineering metrics. The result?

Spoiler: teams with higher AI usage are shipping faster, reviewing quicker, and completing story points with less friction.

Want to debug productivity?

This is how you trace it, back to the tools, the patterns, and the behaviors that actually move the needle.

3. Plan Better with AI vs Manual Code Insight

Estimates only work if your assumptions reflect reality.

When 60% of a feature is scaffolded by AI in minutes, planning off manual heuristics makes no sense.

Hivel shows you:

- How much of your codebase is AI-written vs manually authored

- When to adjust point estimates to reflect reality

- Where velocity is real, and where it’s just artificial acceleration with no validation

This isn’t about punishing speed.

It’s about understanding why something was fast, so you can plan smarter next time.

4. Measure Real AI Adoption and Activation

AI isn’t magic if no one uses it.

Hivel tracks active vs inactive AI users over time, so you can:

- Monitor AI tool engagement over time

- Drive adoption through enablement and coaching

- Identify friction before it turns into frustration
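To show what "active vs inactive" could mean in practice, here is a minimal sketch that assumes a user is active if they used an AI tool within a recent window. The 14-day window, the function name, and the sample dates are assumptions for illustration; the article does not specify how Hivel defines activity.

```python
from datetime import date, timedelta
from typing import Optional


def split_by_activity(
    last_used: dict[str, Optional[date]],
    today: date,
    window_days: int = 14,
) -> tuple[list[str], list[str]]:
    """Split users into (active, inactive) lists.

    A user counts as active if their last recorded AI-tool use falls within
    the past `window_days` days. None means no recorded use at all.
    """
    cutoff = today - timedelta(days=window_days)
    active, inactive = [], []
    for user, last in sorted(last_used.items()):
        if last is not None and last >= cutoff:
            active.append(user)
        else:
            inactive.append(user)
    return active, inactive


# Made-up sample data, for illustration only.
usage = {
    "dev_a": date(2025, 1, 29),
    "dev_b": date(2024, 12, 1),
    "dev_c": None,
}
active, inactive = split_by_activity(usage, today=date(2025, 1, 31))
print("active:", active)
print("inactive:", inactive)
```

Running the same split on a schedule and storing the counts gives the over-time trend the list above refers to.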

This isn’t just about usage; it’s about influence.

Because your most effective AI users?

They’re your blueprint for scaling AI productivity across your org.

The Impact of Aligning SDLC with AI-Driven Development

Upgrading your SDLC isn’t just about keeping up with AI; it’s about unlocking smoother delivery, faster feedback loops, and smarter decision-making across the board.

With Hivel, you don’t just see velocity; you understand it.

You get visibility into what was AI-assisted, where human insight was needed, and how work actually happened. That means fewer blind spots, more accurate estimates, and better alignment between planning, execution, and outcomes.

No more guessing whether something was fast because of AI or just unusually efficient.

No more outdated playbooks slowing down teams who are already building at tomorrow’s speed.

When your SDLC is AI-aware, you don’t just ship faster. You plan better, review smarter, and scale what works.

Upgrade your SDLC. The AI dev era is already here.

Developers have already upgraded, whether your process is ready or not.

Also read: Hidden Costs of Code Reviews: Why Manual Processes Fail in the AI Era
